A First Look at Docker


Docker is a set of tools that deliver software in isolated packages called containers that bundle their software, libraries and configuration.

Outline

- Introduction

- Create Node Project

- Create and Build Container Image

- Run the Image

- Create Docker Compose File

- Push Project to a GitHub Repository

- Publish to GitHub Container Registry

Introduction

Docker is a set of tools that use OS-level virtualization to deliver software in isolated packages called containers. Containers bundle their own software, libraries and configuration files. They are isolated from one another but can communicate through well-defined channels, and because they share the host's operating system kernel, they use fewer resources than virtual machines.

The code for this article is available on my GitHub and the container image can be found on the GitHub Container Registry and Docker Hub.

Create Node Project

We will create a simple Node application with Express that returns an HTML fragment.

Initialize Project and Install Dependencies

mkdir ajcwebdev-docker
cd ajcwebdev-docker
npm init -y
npm i express
touch index.js

Create Server

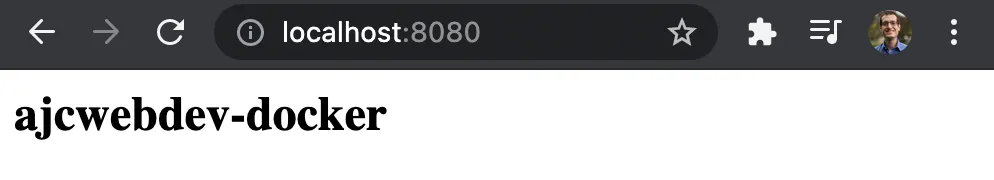

Enter the following code into index.js.

const express = require("express")
const app = express()

const PORT = 8080
const HOST = '0.0.0.0'

app.get('/', (req, res) => {
  res.send('<h2>ajcwebdev-docker</h2>')
})

app.listen(PORT, HOST)
console.log(`Running on http://${HOST}:${PORT}`)

Run Server

node index.js

Running on http://0.0.0.0:8080

Create and Build Container Image

To run this app inside a Docker container, we first need to build a Docker image of it, starting from the official Node image. We will need two files: Dockerfile and .dockerignore.

Create Dockerfile and .dockerignore Files

Docker can build images automatically by reading the instructions from a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build, users can create an automated build that executes several command-line instructions in succession.

touch Dockerfile

The FROM instruction initializes a new build stage and sets the base image for subsequent instructions. Apart from parser directives, comments, and globally scoped ARG instructions, a valid Dockerfile must start with FROM. The first thing we need to do is define which image we want to build from. We will use the 14-alpine version of the node image available from Docker Hub because the universe is chaos and you have to pick something, so you might as well pick something with a smaller image size.

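FROM node:14-alpine

The LABEL instruction adds metadata to an image as key-value pairs. The GitHub Container Registry uses the org.opencontainers.image.source label to connect the image to its source repository.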

LABEL org.opencontainers.image.source=https://github.com/ajcwebdev/ajcwebdev-docker

The WORKDIR instruction sets the working directory inside the image that will hold our application code.

WORKDIR /usr/src/app

This image comes with Node.js and npm already installed, so the next thing we need to do is install our app's dependencies with the npm binary. The COPY instruction copies new files or directories from <src> and adds them to the filesystem of the image at the path <dest>. We use it to bring our package files, and later our app's source code, into the image.

COPY package*.json ./

The RUN instruction executes a command in a new layer on top of the current image and commits the result. The resulting committed image is used for the next step in the Dockerfile. Rather than copying the entire working directory, we only copy package.json and package-lock.json (both matched by package*.json). Docker reuses a cached layer when its instruction and inputs have not changed, so the dependency install layer is only rebuilt when these files change, not every time we edit our source code.
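To make the caching argument concrete, here is a toy model in JavaScript. It is a simplification, not Docker's actual cache algorithm: we assume each layer's cache key is a hash of its parent's key, the instruction text, and the contents of any files that instruction copies. The helper names (layerKeys, steps) and the sample file contents are hypothetical.

```javascript
const { createHash } = require("crypto")

// Toy model (a simplification, not Docker's real cache algorithm): a layer's
// cache key hashes the parent key, the instruction text, and the contents of
// any files that instruction copies from the build context.
function sha256(text) {
  return createHash("sha256").update(text).digest("hex")
}

// files: { path: contents }; a step may carry a `copies(path)` predicate
function layerKeys(steps, files) {
  let parent = ""
  return steps.map(({ instruction, copies }) => {
    const copied = Object.keys(files)
      .filter((path) => Boolean(copies && copies(path)))
      .sort()
      .map((path) => `${path}:${sha256(files[path])}`)
      .join("\n")
    parent = sha256(`${parent}\n${instruction}\n${copied}`)
    return parent
  })
}

// Hypothetical mirror of the Dockerfile steps discussed here
const steps = [
  { instruction: "FROM node:14-alpine" },
  { instruction: "COPY package*.json ./", copies: (p) => /^package.*\.json$/.test(p) },
  { instruction: "RUN npm i" },
  { instruction: "COPY . ./", copies: () => true },
]

const before = { "package.json": '{"name":"ajcwebdev-docker"}', "index.js": "// v1" }
const after = { ...before, "index.js": "// v2" } // only the source code changed

const a = layerKeys(steps, before)
const b = layerKeys(steps, after)
console.log(a[2] === b[2]) // true: the RUN npm i layer can be reused
console.log(a[3] === b[3]) // false: COPY . ./ must be rebuilt
```

Swapping the order so that COPY . ./ runs before RUN npm i would make the install layer's key change on every source edit, which is exactly what copying package*.json first avoids.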

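RUN npm i
COPY . ./

After the dependencies are installed, COPY . ./ copies the rest of our source code into the image. The EXPOSE instruction informs Docker that the container listens on the specified network ports at runtime. Our app binds to port 8080, so we declare that port with EXPOSE. EXPOSE does not publish the port on its own; it documents which port the app uses, and we still map it to a port on the host with the -p flag when we run the container.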

EXPOSE 8080

Define the command to run the app using CMD, which defines our runtime. The main purpose of a CMD is to provide defaults for an executing container. Here we will use node index.js to start our server.

CMD ["node", "index.js"]

Our complete Dockerfile should now look like this:

FROM node:14-alpine
LABEL org.opencontainers.image.source=https://github.com/ajcwebdev/ajcwebdev-docker
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm i
COPY . ./
EXPOSE 8080
CMD [ "node", "index.js" ]

Before the docker CLI sends the context to the docker daemon, it looks for a file named .dockerignore in the root directory of the context. Create a .dockerignore file in the same directory as our Dockerfile.

touch .dockerignore

If this file exists, the CLI modifies the context to exclude files and directories that match patterns in it. This helps avoid sending large or sensitive files and directories to the daemon.

node_modules
Dockerfile
.dockerignore
.git
.gitignore
npm-debug.log

This will prevent our local modules and debug logs from being copied into our Docker image, where they could overwrite the modules installed within the image.
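The matching rules can be sketched in a few lines of JavaScript. This is a simplified, hypothetical matcher (filterContext and toRegExp are our own names): it handles literal names, a * wildcard within one path segment, and patterns that exclude a whole directory, but not the ** and ! exception syntax the real .dockerignore format supports.

```javascript
// Simplified .dockerignore matcher (an assumption: handles literal names, `*`
// within a single path segment, and directory patterns only; the real format
// also supports `**`, `?`, and `!` exceptions).
function toRegExp(pattern) {
  const body = pattern
    .replace(/^\/+|\/+$/g, "") // patterns are relative to the context root
    .replace(/[.+?^${}()|[\]\\]/g, "\\$&") // escape regex metacharacters
    .replace(/\*/g, "[^/]*") // `*` never crosses a "/"
  // A match on a directory also excludes everything beneath it
  return new RegExp(`^${body}(/.*)?$`)
}

function filterContext(paths, ignoreFile) {
  const rules = ignoreFile
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line !== "" && !line.startsWith("#")) // skip blanks and comments
    .map(toRegExp)
  return paths.filter((path) => !rules.some((rule) => rule.test(path)))
}

const ignore = "node_modules\nDockerfile\n.dockerignore\n.git\n.gitignore\nnpm-debug.log\n"
const context = [
  "index.js",
  "package.json",
  "node_modules/express/index.js",
  ".git/HEAD",
  ".gitignore",
  "npm-debug.log",
]
console.log(filterContext(context, ignore)) // [ 'index.js', 'package.json' ]
```

Note that the .git pattern excludes .git/HEAD but not .gitignore; that file is only excluded because it has its own line.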

Build Project

The docker build command builds an image from a Dockerfile and a “context”. A build’s context is the set of files located in the specified PATH or URL. The URL parameter can refer to three kinds of resources:

- Git repositories

- Pre-packaged tarball contexts

- Plain text files
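Which kind of resource you get depends on the shape of the argument. The sketch below is our simplified reading of the documented behavior, not the docker CLI's actual code, and classifyBuildContext is a hypothetical helper. For a remote URL that is not a Git repository, whether it is a tarball or a plain-text Dockerfile can only be decided from the downloaded content, which the sketch does not model. The example.com URL is a placeholder.

```javascript
// Rough sketch of how a `docker build` argument is interpreted (a simplification
// of the documented behavior, not the docker CLI's actual code).
function classifyBuildContext(arg) {
  if (arg === "-") return "stdin" // Dockerfile or tarball piped on standard input

  const isHttp = /^https?:\/\//.test(arg)
  const withoutFragment = arg.split("#")[0] // drop an optional "#ref:subdir" suffix
  const looksLikeGit =
    arg.startsWith("git://") ||
    arg.startsWith("git@") ||
    arg.startsWith("github.com/") ||
    (isHttp && withoutFragment.endsWith(".git"))

  if (looksLikeGit) return "git" // the cloned repository becomes the context
  if (isHttp) return "remote-file" // tarball or plain-text Dockerfile, known only after download
  return "local-path" // e.g. "." sends the current directory
}

const samples = [
  ".",
  "-",
  "https://github.com/ajcwebdev/ajcwebdev-docker.git#main",
  "https://example.com/context.tar.gz", // placeholder URL
]
for (const arg of samples) {
  console.log(`${arg} -> ${classifyBuildContext(arg)}`)
}
```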

Go to the directory with your Dockerfile and build the Docker image.

docker build . -t ajcwebdev-docker

The -t flag lets you tag your image so it’s easier to find later using the docker images command.
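Because we did not give a tag after a colon, Docker applies the default tag latest. Names are also normalized against a default registry: the first path component counts as a registry host only when it contains a dot or a colon or is localhost, so node:14-alpine refers to Docker Hub. The sketch below illustrates these rules as we understand them; parseImageRef is hypothetical, and digests and name validation are ignored.

```javascript
// Sketch of Docker's image-name normalization as we understand it (an
// assumption; digests such as "@sha256:..." and name validation are ignored).
function parseImageRef(ref) {
  const slash = ref.indexOf("/")
  const first = slash === -1 ? "" : ref.slice(0, slash)
  // The first component is a registry host only if it looks like one
  const hasRegistry = first.includes(".") || first.includes(":") || first === "localhost"
  const registry = hasRegistry ? first : "docker.io"
  let repository = hasRegistry ? ref.slice(slash + 1) : ref

  // A tag is whatever follows the last ":" in the final path component
  let tag = "latest"
  const colon = repository.lastIndexOf(":")
  if (colon > repository.lastIndexOf("/")) {
    tag = repository.slice(colon + 1)
    repository = repository.slice(0, colon)
  }

  // Single-name Docker Hub images live in the "library" namespace
  if (registry === "docker.io" && !repository.includes("/")) {
    repository = `library/${repository}`
  }
  return { registry, repository, tag }
}

for (const name of ["ajcwebdev-docker", "node:14-alpine"]) {
  const { registry, repository, tag } = parseImageRef(name)
  console.log(`${name} -> ${registry}/${repository}:${tag}`)
}
// ajcwebdev-docker -> docker.io/library/ajcwebdev-docker:latest
// node:14-alpine -> docker.io/library/node:14-alpine
```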

List Images

Your image will now be listed by Docker. The docker images command will list all top level images, their repository and tags, and their size.

docker images

REPOSITORY         TAG      IMAGE ID       CREATED         SIZE
ajcwebdev-docker   latest   cf27411146f2   4 minutes ago   118MB

Run the Image

Docker runs processes in isolated containers. A container is a process which runs on a host. The host may be local or remote.

Run Docker Container

When an operator executes docker run, the container process that runs is isolated in that it has its own file system, its own networking, and its own isolated process tree separate from the host.

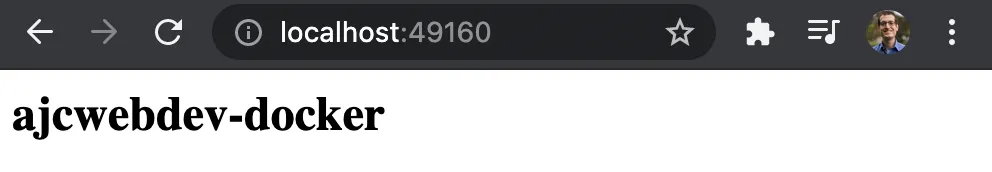

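docker run -p 49160:8080 -d ajcwebdev-docker

The -d flag runs the container in detached mode, leaving it running in the background. The -p flag publishes a container port on the host. It takes the host port first and the container port second, so here port 49160 on your machine is forwarded to port 8080 inside the container.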
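The order of the two numbers in -p is easy to mix up. This sketch parses the common forms of the value (hostIp:hostPort:containerPort, hostPort:containerPort, or just containerPort, with an optional /protocol suffix). parsePublish is a hypothetical helper, and it does not handle port ranges or bracketed IPv6 addresses.

```javascript
// Sketch of the `-p` value layout: [hostIp:][hostPort:]containerPort[/protocol]
// (a simplification: port ranges and bracketed IPv6 host addresses are not handled).
function parsePublish(spec) {
  const [ports, protocol = "tcp"] = spec.split("/")
  const parts = ports.split(":")
  if (parts.length > 3) throw new Error(`unsupported -p value: ${spec}`)

  const containerPort = Number(parts.pop()) // always the last field
  const hostPortText = parts.pop() // undefined when only the container port is given
  const hostIp = parts.pop() || "0.0.0.0" // default: listen on all interfaces
  const hostPort = hostPortText ? Number(hostPortText) : null // null: Docker picks a free port
  return { hostIp, hostPort, containerPort, protocol }
}

const { hostPort, containerPort } = parsePublish("49160:8080")
console.log(`host ${hostPort} -> container ${containerPort}`) // host 49160 -> container 8080
```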

List Containers

To test our app, get the port that Docker mapped:

docker ps

CONTAINER ID   IMAGE              COMMAND                  CREATED          STATUS          PORTS                                         NAMES
d454a8aacc28   ajcwebdev-docker   "docker-entrypoint.s…"   13 seconds ago   Up 11 seconds   0.0.0.0:49160->8080/tcp, :::49160->8080/tcp   sad_kepler

Print Output of App

docker logs <container id>

Running on http://0.0.0.0:8080

Docker mapped port 8080 inside the container to port 49160 on your machine.
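If you want to script this lookup, docker port <container id> prints a container's mappings directly, and docker ps --format '{{.Ports}}' prints only the PORTS column. The sketch below parses that column, assuming the hostIp:hostPort->containerPort/protocol layout shown in the docker ps output above; parsePortsColumn is a hypothetical helper.

```javascript
// Sketch: extract mappings from the PORTS column printed by `docker ps`, assuming
// the "hostIp:hostPort->containerPort/protocol" layout shown in the listing.
function parsePortsColumn(column) {
  return column
    .split(",")
    .map((entry) => entry.trim())
    .filter((entry) => entry.includes("->")) // skip ports that are exposed but not published
    .map((entry) => {
      const [host, container] = entry.split("->")
      const sep = host.lastIndexOf(":") // the IPv6 form ":::49160" has extra colons
      const [containerPort, protocol] = container.split("/")
      return {
        hostIp: host.slice(0, sep),
        hostPort: Number(host.slice(sep + 1)),
        containerPort: Number(containerPort),
        protocol,
      }
    })
}

const mappings = parsePortsColumn("0.0.0.0:49160->8080/tcp, :::49160->8080/tcp")
console.log(mappings.map((m) => m.hostIp)) // [ '0.0.0.0', '::' ]
console.log(mappings.find((m) => m.containerPort === 8080).hostPort) // 49160
```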

Call App using curl

curl -i localhost:49160

HTTP/1.1 200 OK
X-Powered-By: Express
Content-Type: text/html; charset=utf-8
Content-Length: 25
ETag: W/"19-iWXWa+Uq4/gL522tm8qTMfqHQN0"
Date: Fri, 16 Jul 2021 18:48:54 GMT
Connection: keep-alive
Keep-Alive: timeout=5

<h2>ajcwebdev-docker</h2>

Create Docker Compose File

Compose is a tool for defining and running multi-container Docker applications. After configuring our application’s services with a YAML file, we can create and start all our services with a single command.

touch docker-compose.yml

Define the services that make up our app in docker-compose.yml so they can be run together in an isolated environment.

version: "3.9"
services:
  web:
    build: .
    ports:
      - "49160:8080"

Create and Start Containers

Stop your currently running container before running the next command or the port will be in use.

docker stop <container id>

The docker compose up command aggregates the output of each container. It builds, (re)creates, starts, and attaches to containers for a service.

docker compose up

Attaching to web_1
web_1  | Running on http://0.0.0.0:8080

Push Project to a GitHub Repository

We can publish this image to the GitHub Container Registry with GitHub Packages. This will require pushing our project to a GitHub repository. Before initializing Git, create a .gitignore file for node_modules and our environment variables.

printf 'node_modules\n.DS_Store\n.env\n' > .gitignore

It is good practice to ignore files containing environment variables to prevent sensitive API keys from being committed to a public repo. This is why I have included .env even though we don’t have a .env file in this project right now.

Initialize Git

git init
git add .
git commit -m "I can barely contain my excitement"

Create a New Repository

You can create a blank repository by visiting repo.new or by using the gh repo create command with the GitHub CLI. Enter the following command to create a new public repository from the current directory, add it as a remote named upstream, and push the project to it.

gh repo create ajcwebdev-docker \
  --public \
  --source=. \
  --remote=upstream \
  --push

If you created a repository from the GitHub website instead of the CLI, then you will need to set the remote and push the project with the following commands.

git remote add origin https://github.com/ajcwebdev/ajcwebdev-docker.git
git branch -M main
git push -u origin main

Publish to GitHub Container Registry

GitHub Packages is a platform for hosting and managing packages that combines your source code and packages in one place including containers and other dependencies. You can integrate GitHub Packages with GitHub APIs, GitHub Actions, and webhooks to create an end-to-end DevOps workflow that includes your code, CI, and deployment solutions.

GitHub Packages offers different package registries for commonly used package managers, such as npm, RubyGems, Maven, Gradle, and Docker. GitHub’s Container registry is optimized for containers and supports Docker and OCI images.

Log in to ghcr

To log in, create a personal access token (PAT) with the write:packages scope so it can upload packages to the GitHub Container Registry. Replace xxxx with your token.

export CR_PAT=xxxx

Log in with your own username in place of ajcwebdev.

echo $CR_PAT | docker login ghcr.io -u ajcwebdev --password-stdin

Tag Image

docker tag ajcwebdev-docker ghcr.io/ajcwebdev/ajcwebdev-docker

Push to Registry

docker push ghcr.io/ajcwebdev/ajcwebdev-docker:latest

Pull Image from Registry

To test that our project has a docker image published to a public registry, pull it from your local development environment.

docker pull ghcr.io/ajcwebdev/ajcwebdev-docker

Using default tag: latest
latest: Pulling from ajcwebdev/ajcwebdev-docker
Digest: sha256:3b624dcaf8c7346b66af02e9c31defc992a546d82958cb067fb6037e867a51e3
Status: Image is up to date for ghcr.io/ajcwebdev/ajcwebdev-docker:latest
ghcr.io/ajcwebdev/ajcwebdev-docker:latest

This article only covers using Docker for local development. However, we could take this exact same project and deploy it to various other container services offered by cloud platforms.

Examples of this would include AWS Fargate or Google Cloud Run. There are also services such as Fly and Flightcontrol that provide higher level abstractions for deploying and hosting your containers.